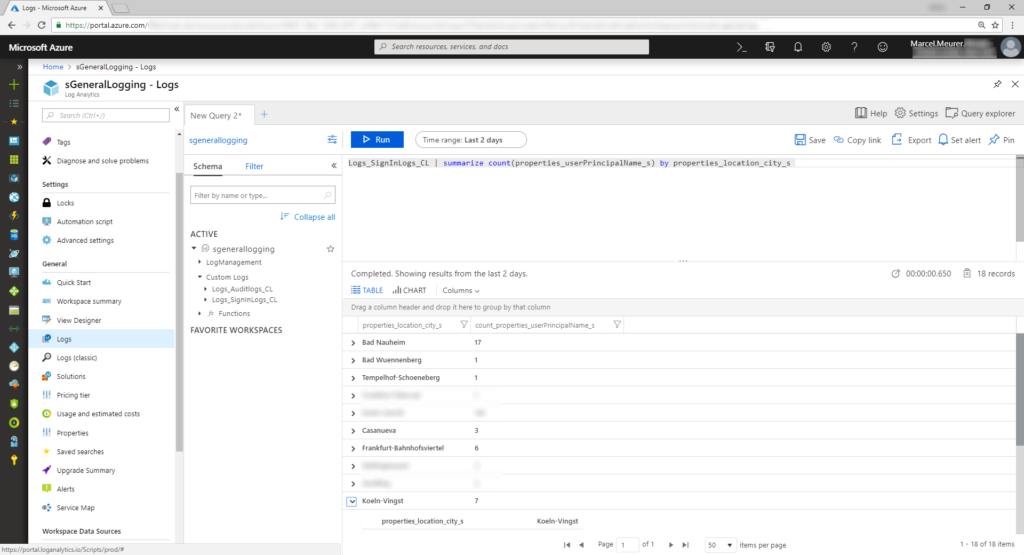

Logging Azure AD audit and sign-ins to Azure OMS Log Analytics

Azure OMS Log Analytics is used by many Azure services. Unfortunately, Azure AD audit and sign-in logs cannot currently be sent to Log Analytics directly (I bet this will change soon). For now, we need a workaround to achieve this.

My favorite way is:

Send audit and sign-in logs to Event Hubs -> collect the event hub data with Logic Apps -> transfer the data to Log Analytics

Step-by-step

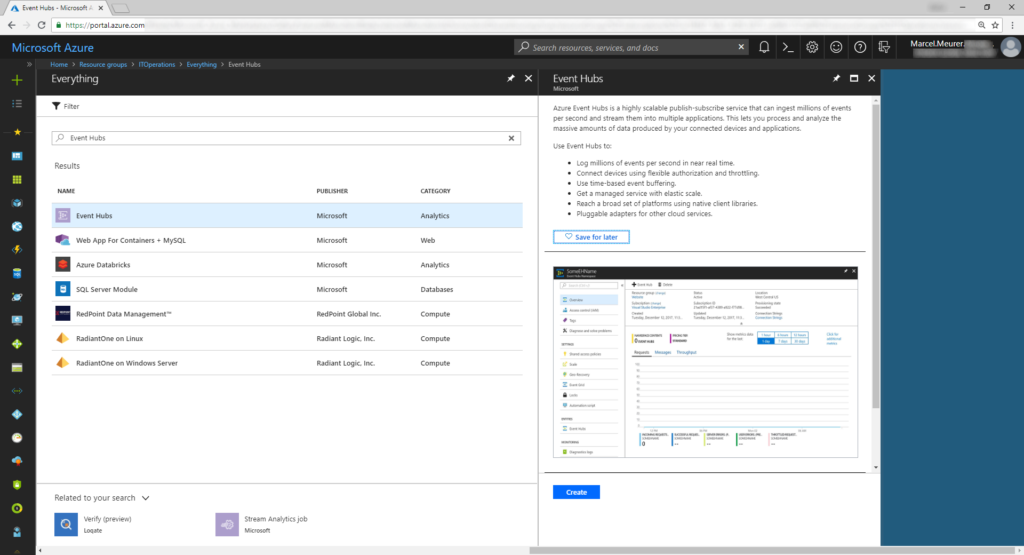

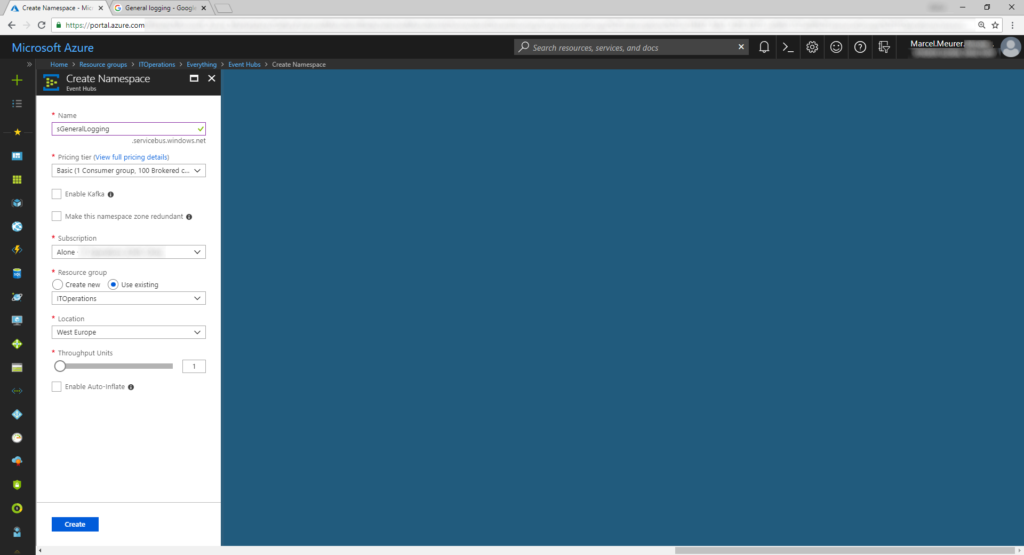

Creating an Event Hub namespace

Create a new Event Hubs namespace in the Azure portal: add “Event Hubs” and give the namespace a unique name.

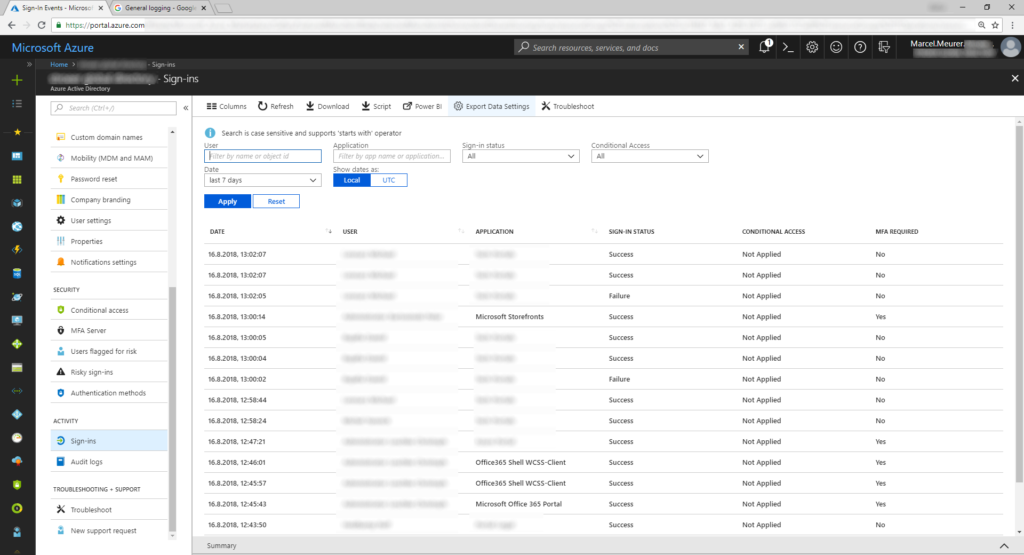

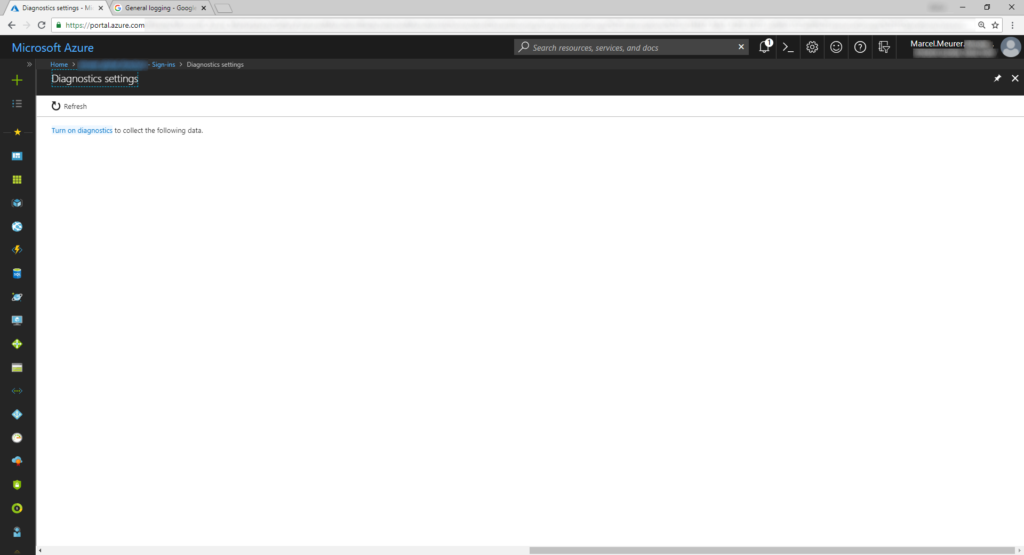

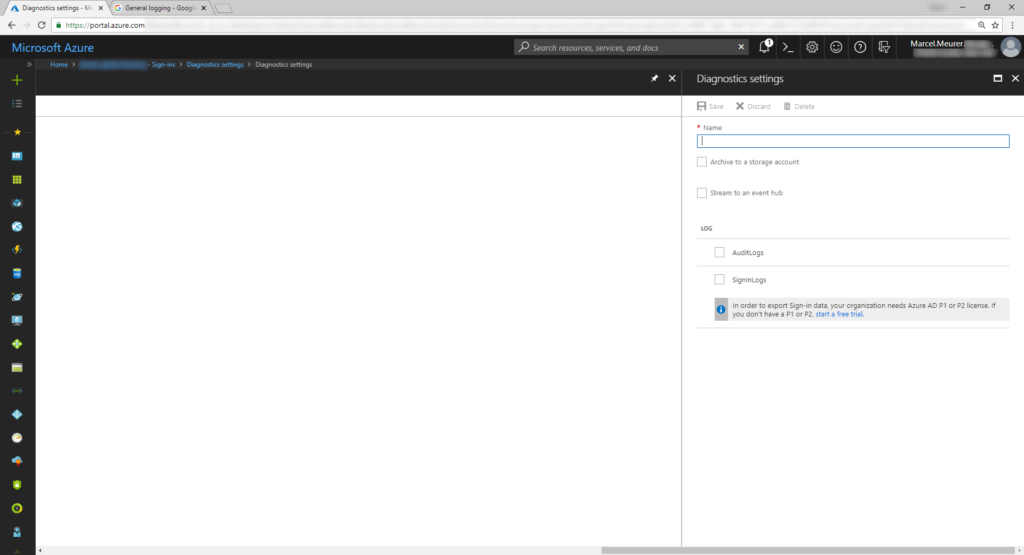

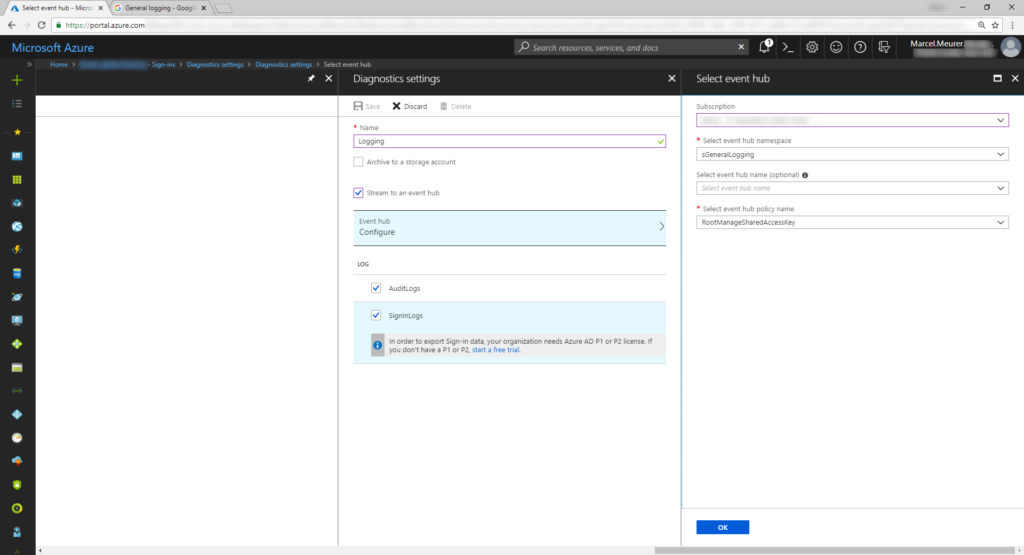

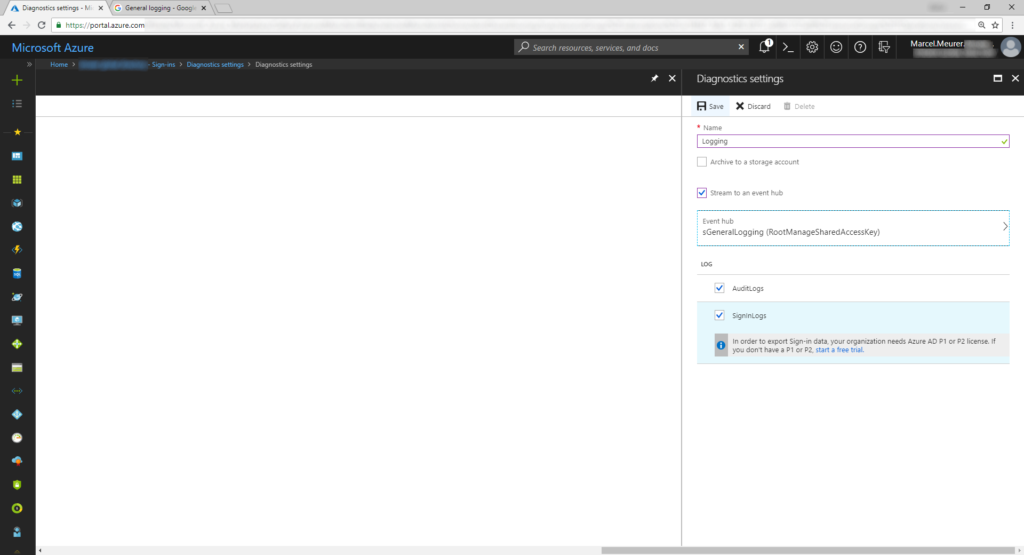

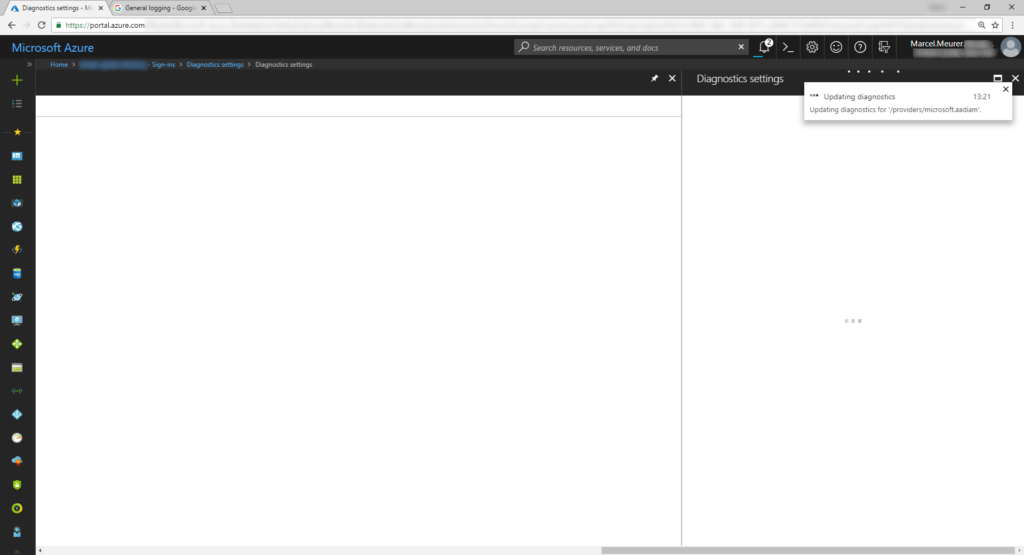

Configure Azure AD diagnostics

Use the portal and navigate to Azure AD -> Audit logs. Select “Export Data Settings” and “Turn on diagnostic”. Give the logging configuration a name, select “Stream to an event hub”, and tick both logs (Audit and Sign-in). Under event hub -> configure, choose the previously created namespace. Leave the optional field blank and select the default event hub policy.

Hint: To get sign-in logs you need premium licenses

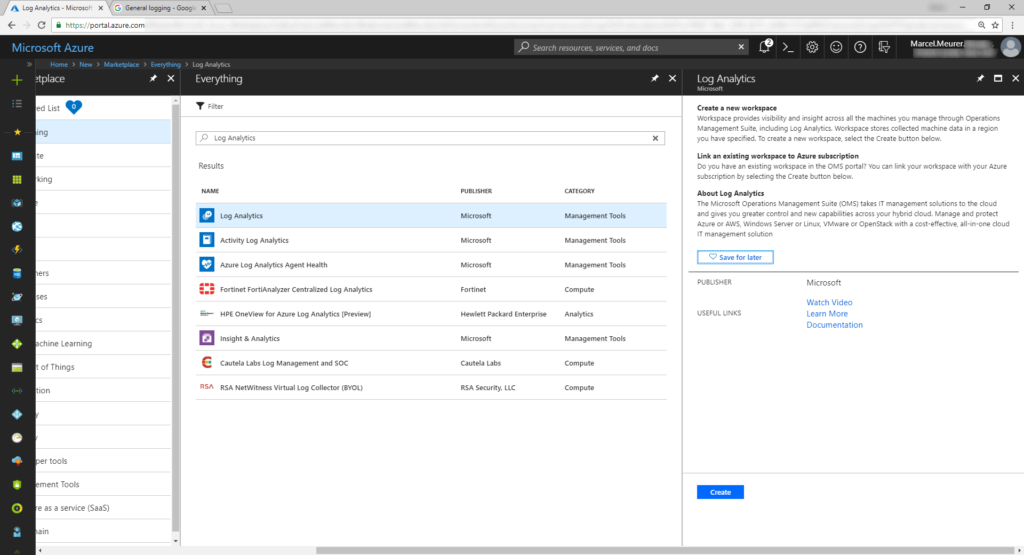

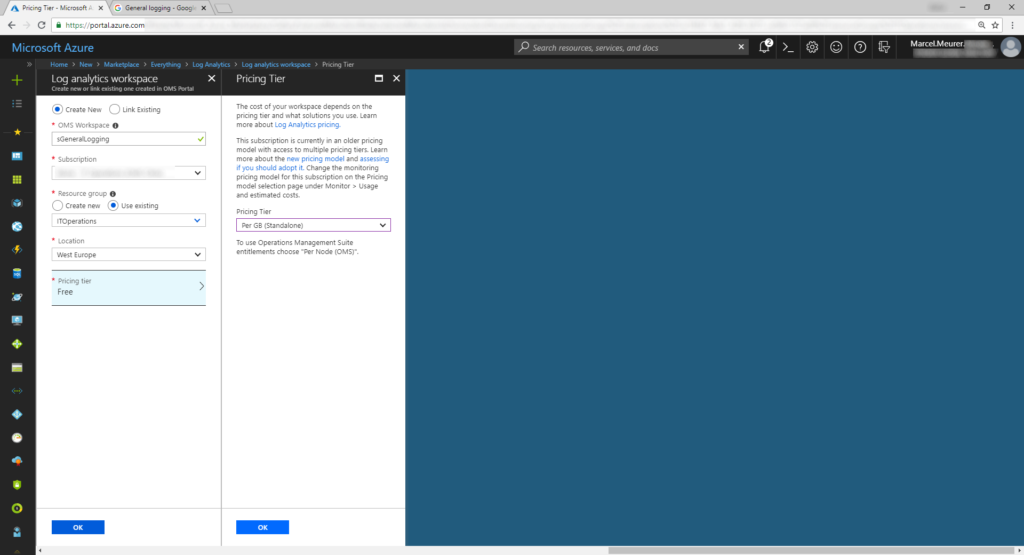

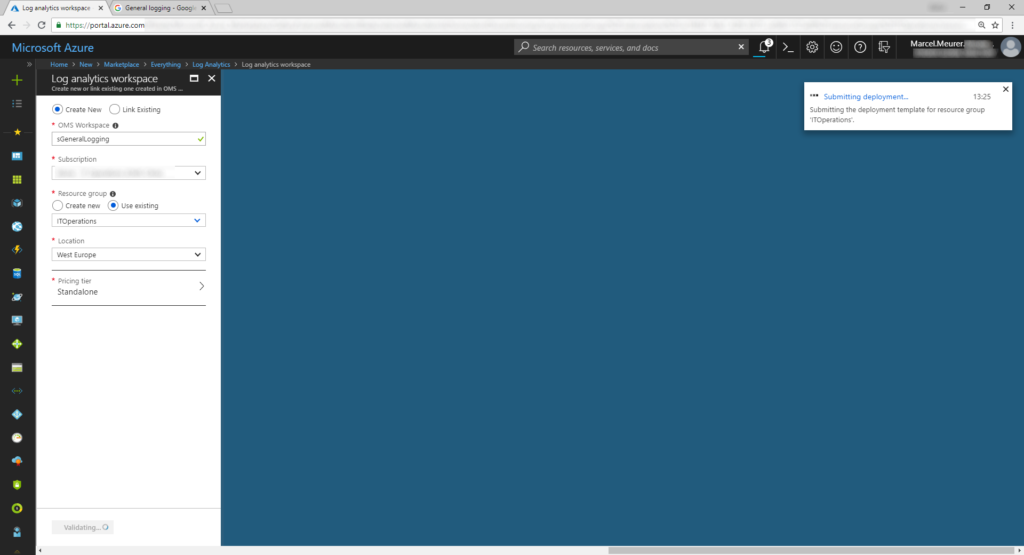

Create a Log Analytics workspace

In the Azure portal, add “Log Analytics” and give the workspace a unique name. Select the pricing tier that fits your needs (you can start with the free tier).

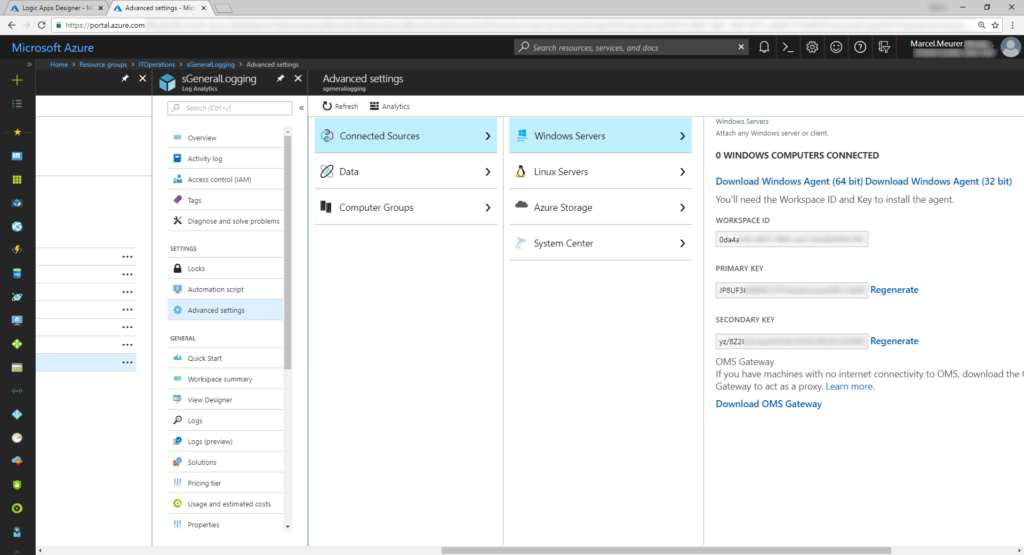

Open the deployed workspace, navigate to Advanced settings -> Connected Sources -> Windows Servers, and copy the workspace ID and primary key for later.

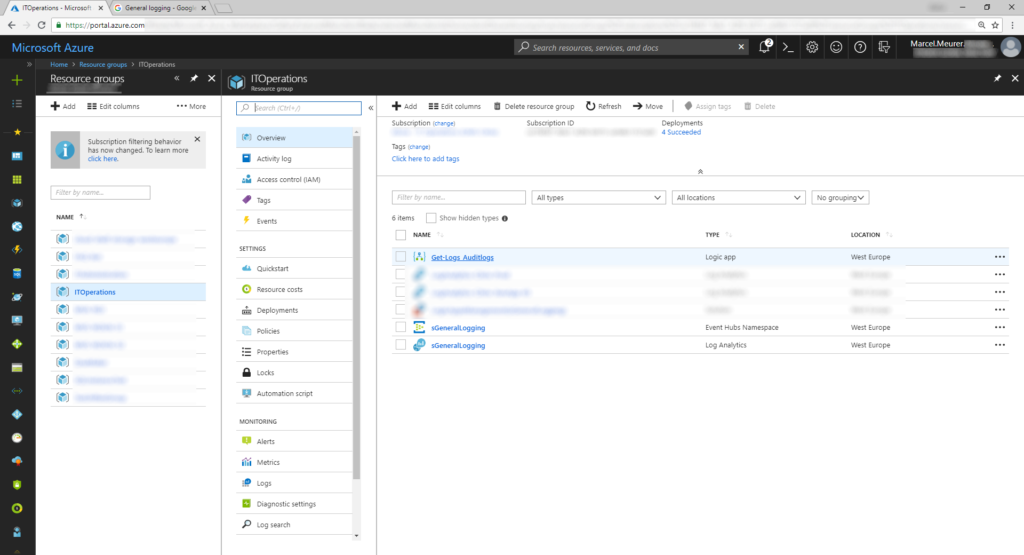

Finally create two Logic Apps

Each logic app gets the log data from the event hub and sends it to your Log Analytics workspace.

First start with a logic app for the audit logs.

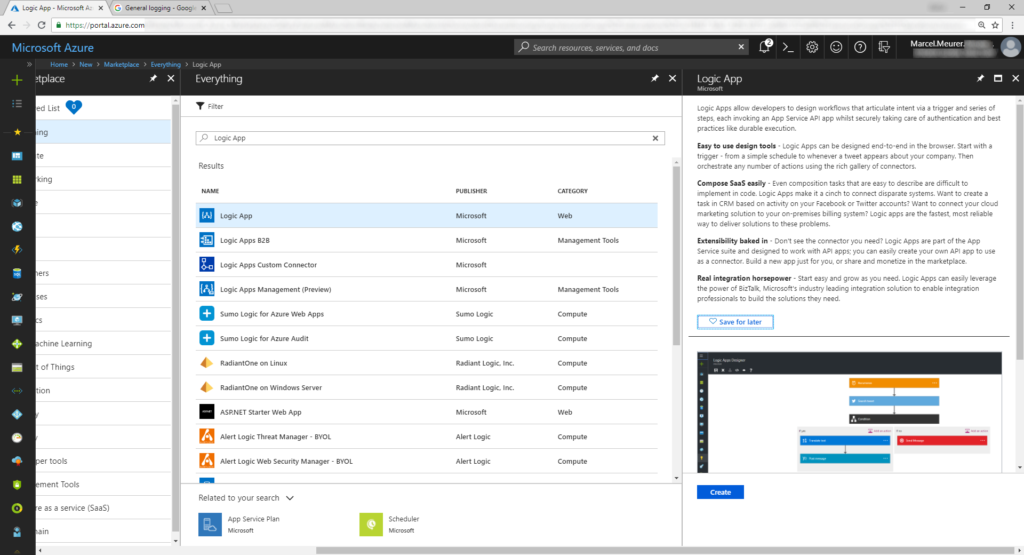

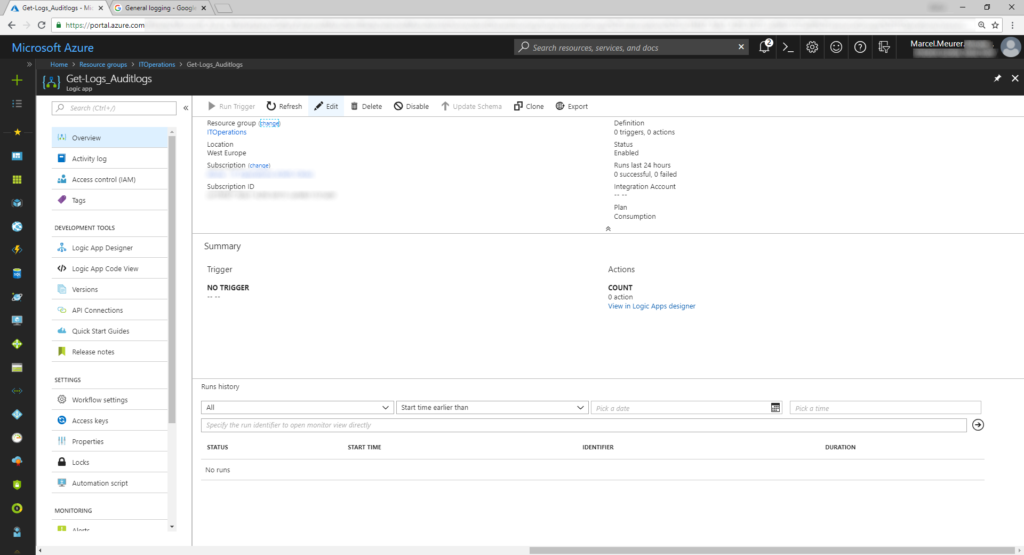

Go to the portal and add a “Logic App”.

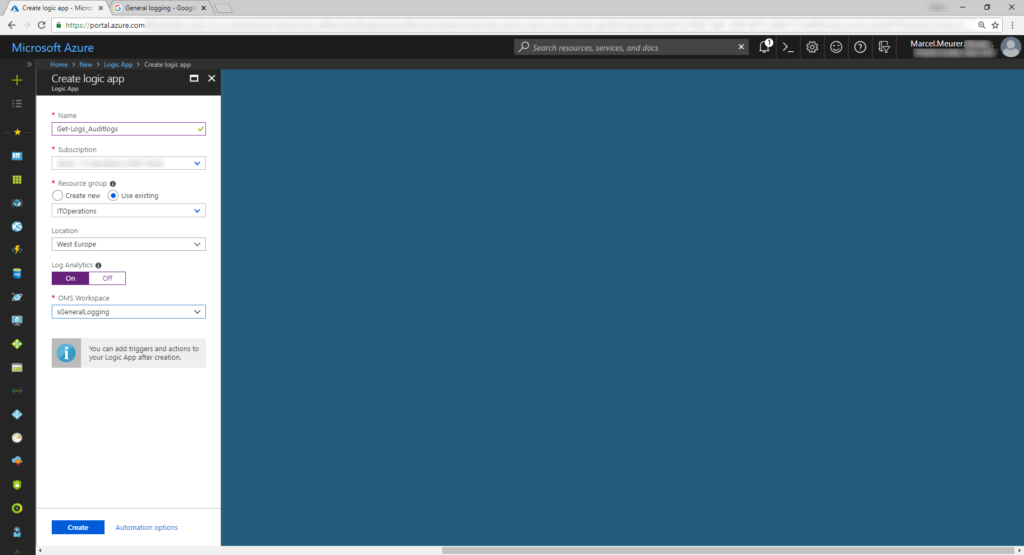

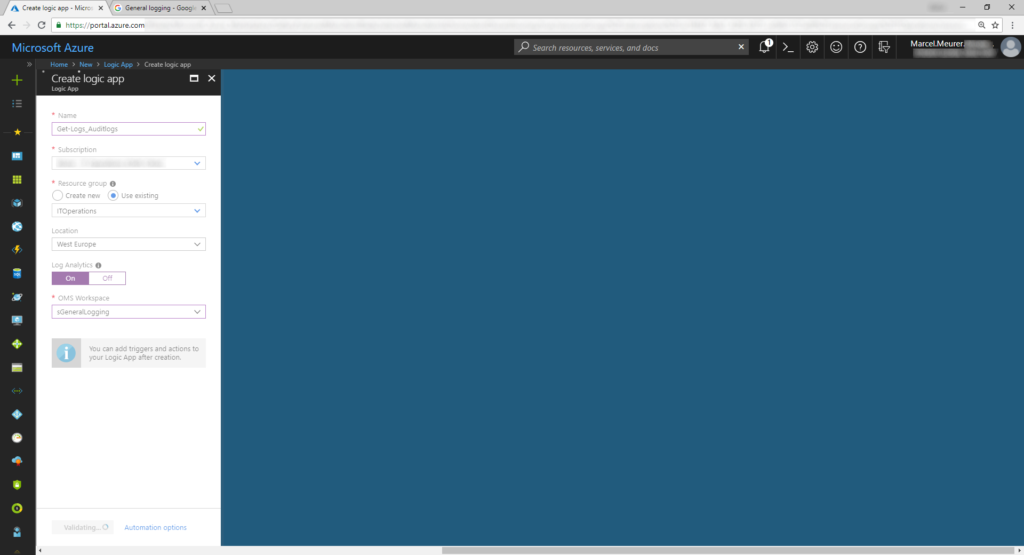

Give the logic app a meaningful name. Optionally, you can send log data from the logic app itself to your workspace.

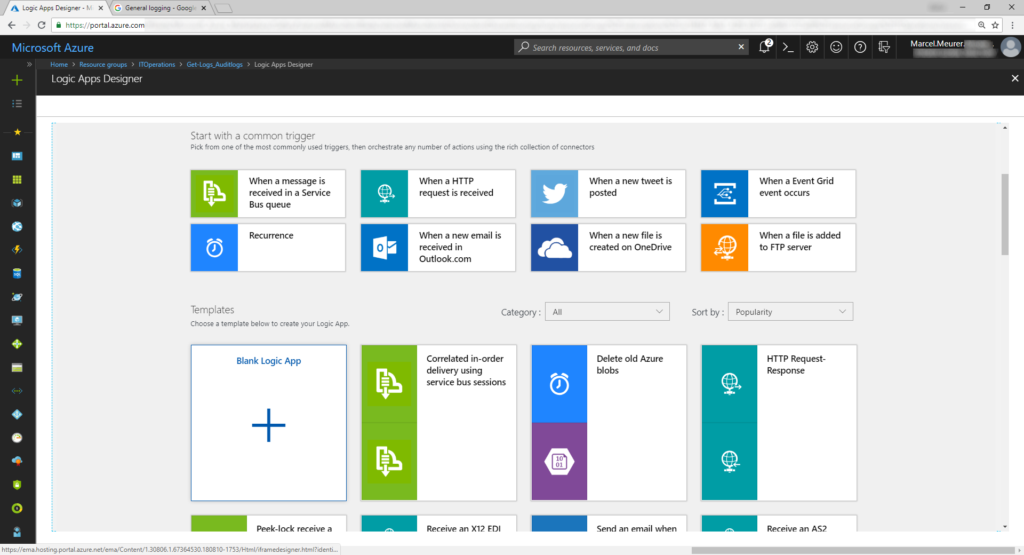

Go to the created logic app, select edit, and start from a blank logic app.

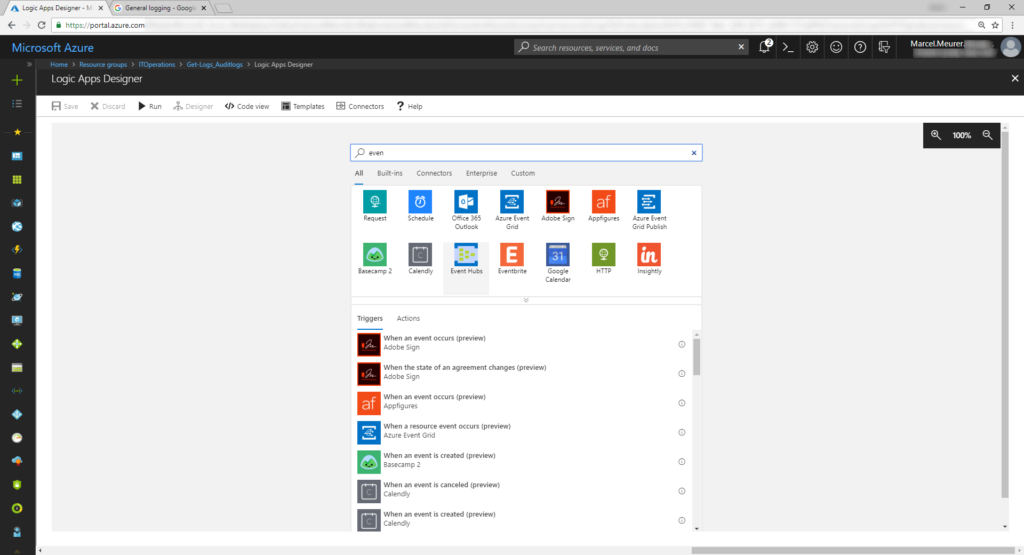

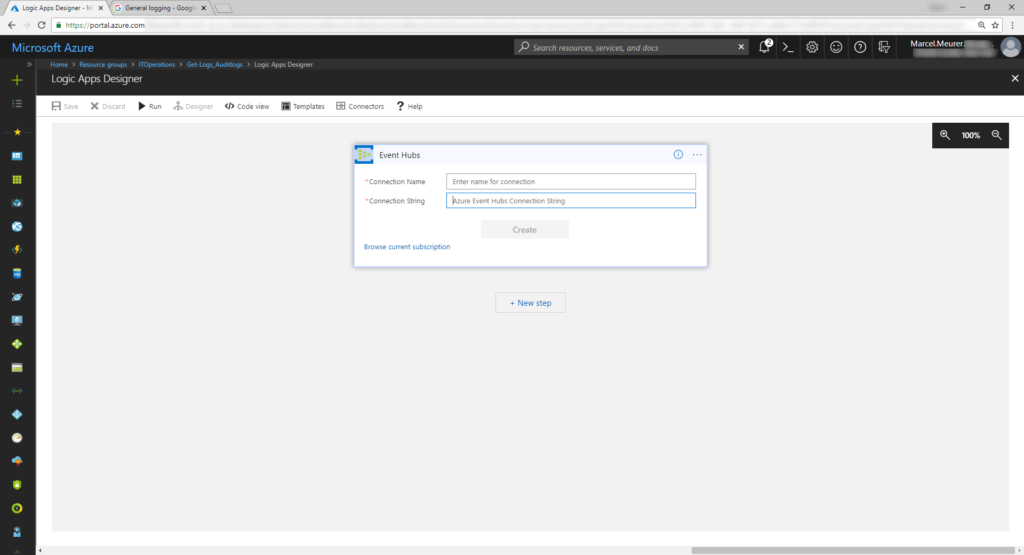

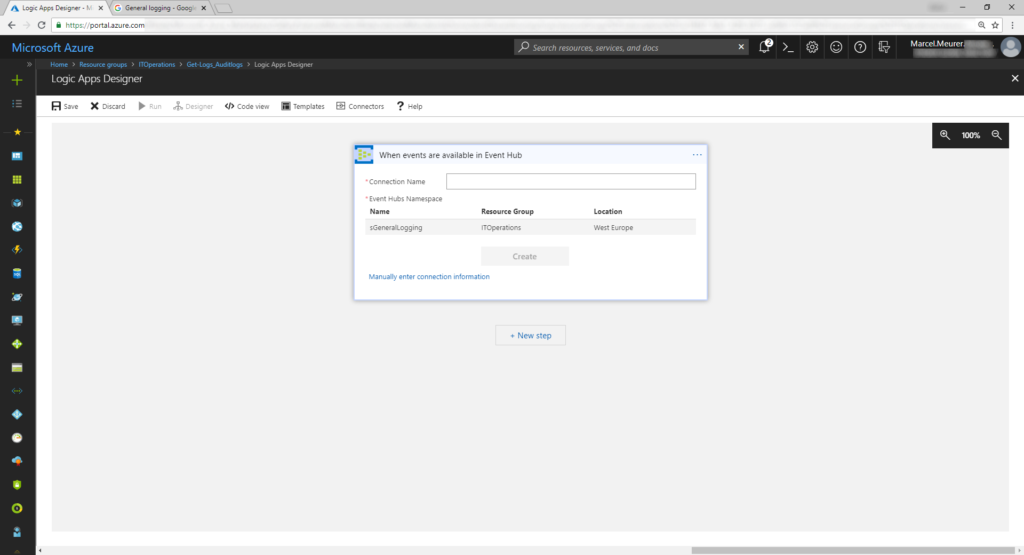

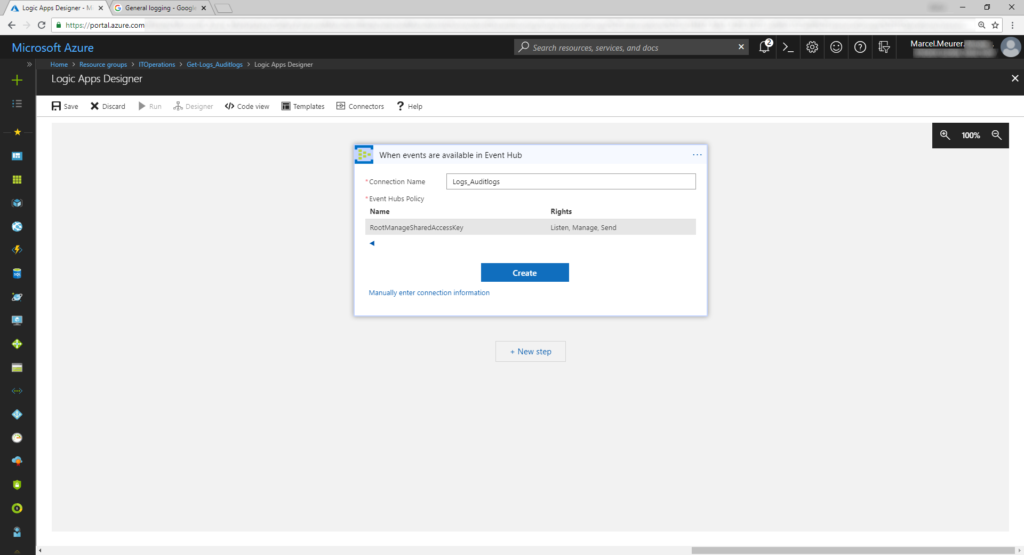

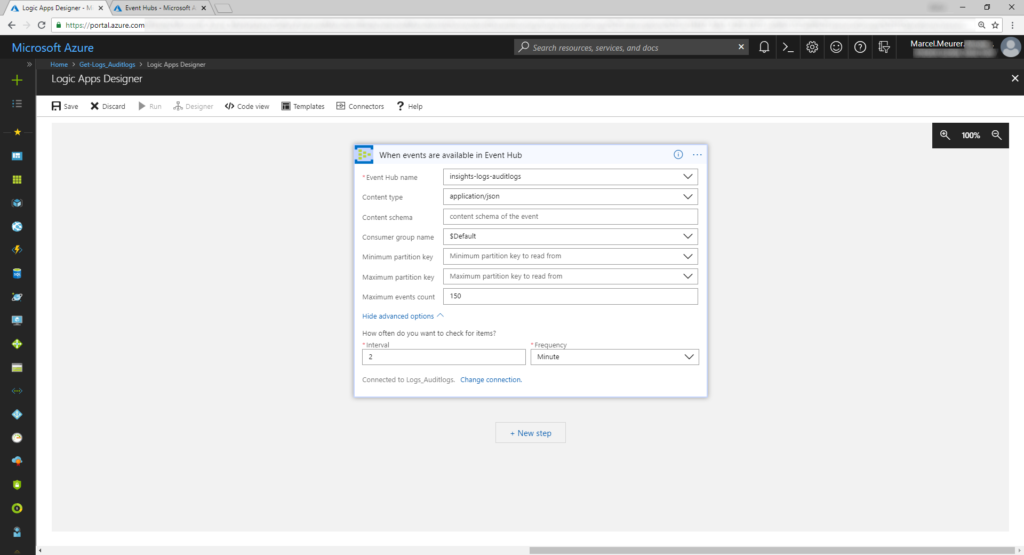

Add “Event Hubs” as the trigger and configure the connection. You can browse your subscription to find the namespace and the event hub itself. Give your connection a name.

Hint: The event hub only becomes visible after Azure AD has created it, which happens as soon as new log data is available.

Set the content type to “application/json” and enter 150 in the maximum events count field. Poll the event hub for new data every 2 minutes (or choose the interval that suits you best).

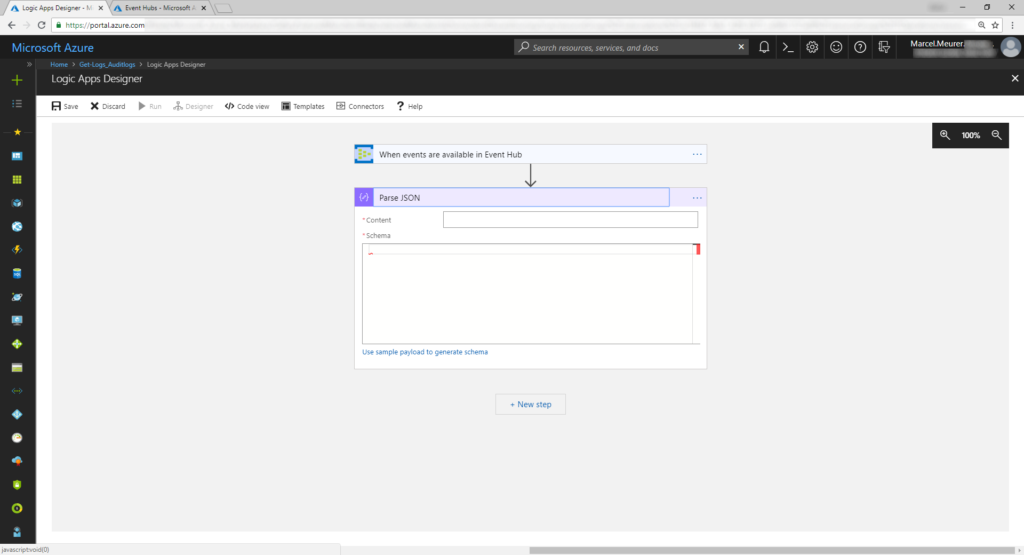

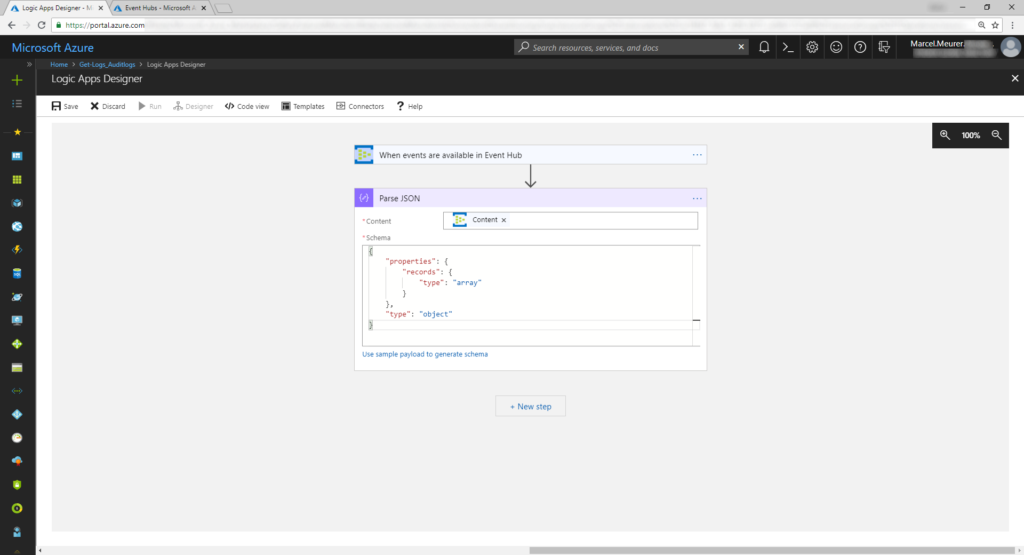

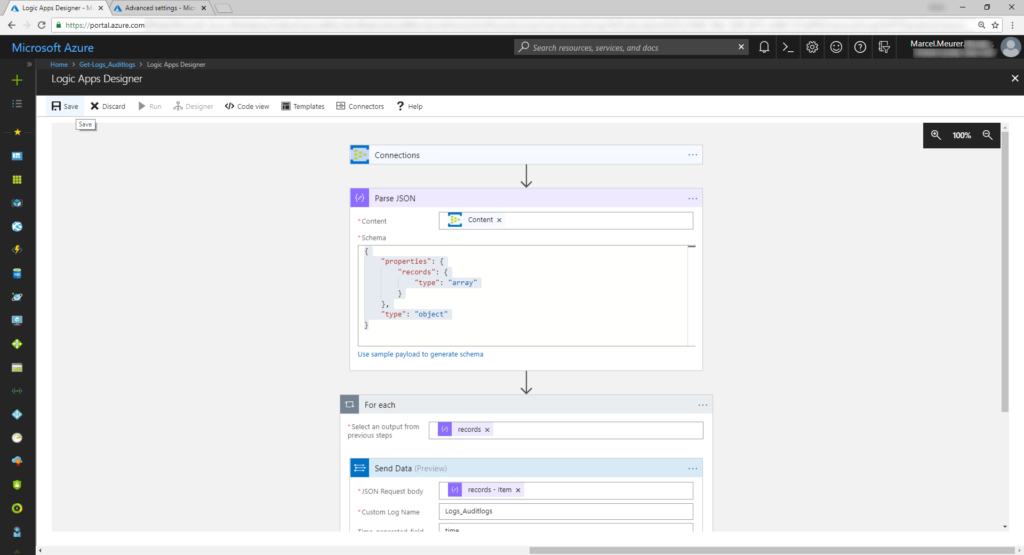

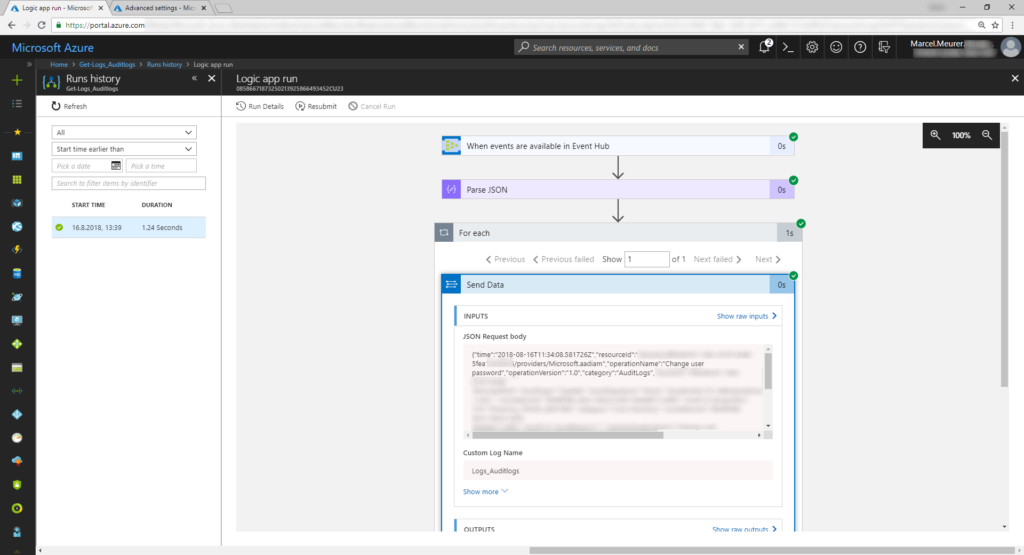

Next: Parse the data from the event hub by adding “Parse JSON” to your logic app.

Use “Content” as input and this schema:

    {
        "properties": {
            "records": {
                "type": "array"
            }
        },
        "type": "object"
    }
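To make the effect of this schema concrete, here is a minimal Python sketch of what the “Parse JSON” step checks: the message body is an object, and its `records` property is an array. The function name `extract_records` and the sample message are assumptions for illustration only; real Azure AD records carry many more fields.

```python
import json


def extract_records(content: str) -> list:
    """Return the `records` array from one event hub message body."""
    payload = json.loads(content)
    # The schema declares the top level as an object ...
    if not isinstance(payload, dict):
        raise ValueError("expected a JSON object at the top level")
    # ... and `records` as an array. The schema does not mark it as
    # required, so a missing property is treated as an empty list here.
    records = payload.get("records", [])
    if not isinstance(records, list):
        raise ValueError("expected 'records' to be an array")
    return records


# Hypothetical sample message, shortened for readability.
sample = '{"records": [{"time": "2018-06-01T10:00:00Z", "category": "AuditLogs"}]}'
for record in extract_records(sample):
    print(record["time"], record["category"])
```

Because the schema says nothing about the shape of the individual records, every field inside a record is passed through untouched.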

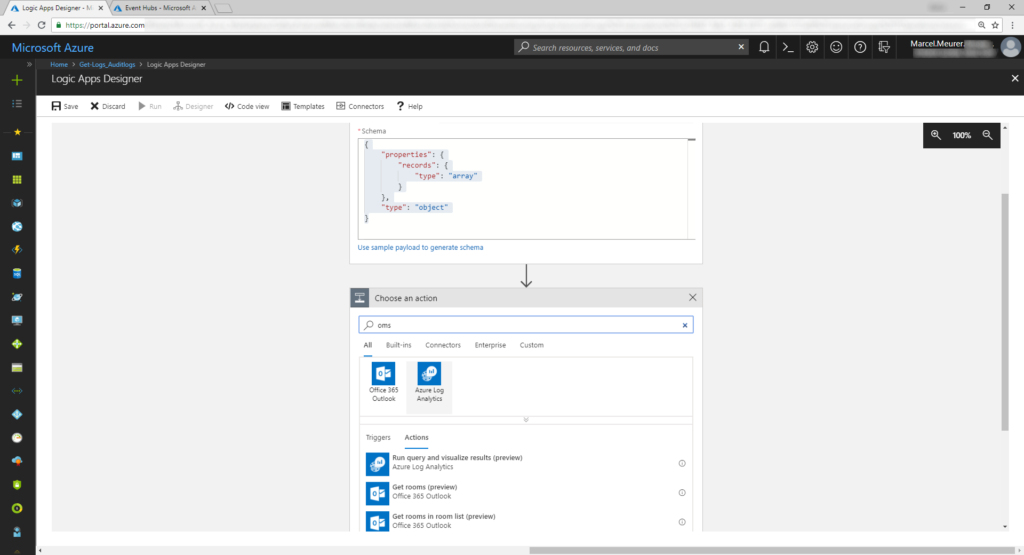

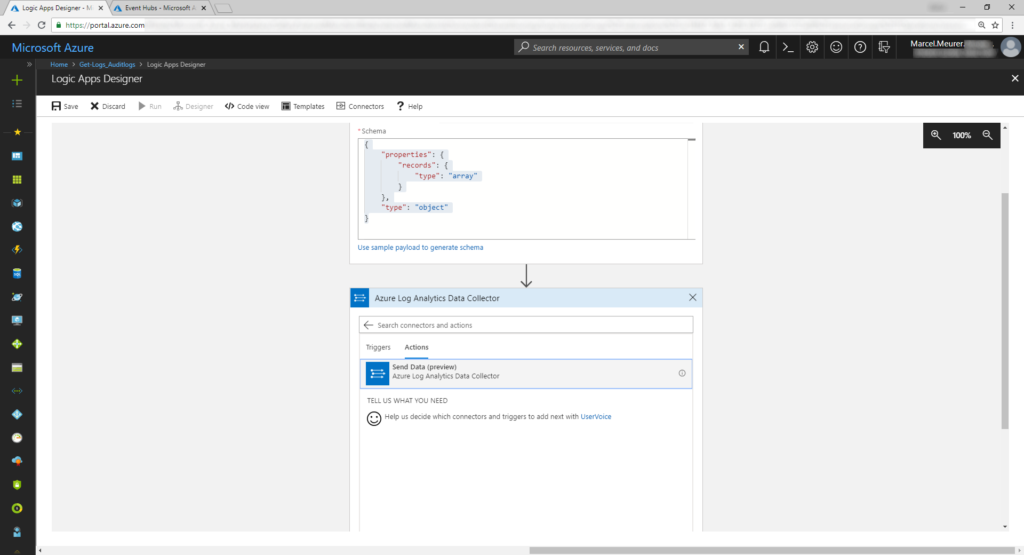

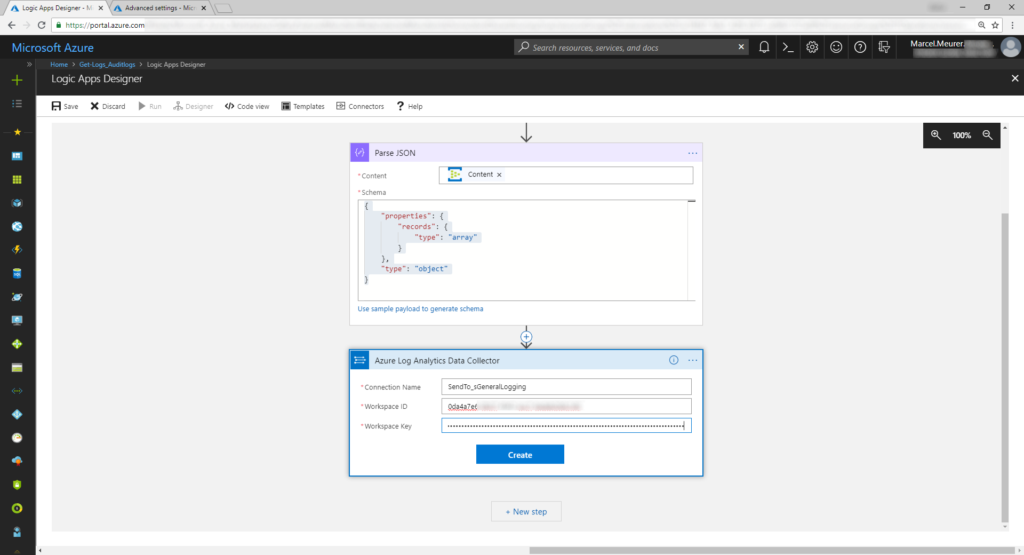

Add “Azure Log Analytics / Send Data” as a new action.

Configure the connection by entering the workspace id and primary key from above.
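If you are curious why the connection needs exactly these two values: the sketch below assumes the connector wraps the Log Analytics HTTP Data Collector API, which signs every request with the workspace ID and primary key. It only builds the request headers and sends nothing. The function names and the placeholder credentials are hypothetical, and the custom log name and time field used in the example are the ones configured in the next step.

```python
import base64
import hashlib
import hmac
from email.utils import formatdate


def build_signature(workspace_id, primary_key, date, content_length):
    """Build the SharedKey Authorization value for a POST to /api/logs."""
    string_to_sign = (
        f"POST\n{content_length}\napplication/json\n"
        f"x-ms-date:{date}\n/api/logs"
    )
    # The primary key is base64 text; decode it before using it as HMAC key.
    key = base64.b64decode(primary_key)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode('ascii')}"


def build_headers(workspace_id, primary_key, body, log_type, time_field, date):
    """Assemble the headers for one Data Collector request (body is bytes)."""
    return {
        "Content-Type": "application/json",
        "Authorization": build_signature(workspace_id, primary_key, date, len(body)),
        "Log-Type": log_type,                # custom log name
        "x-ms-date": date,                   # RFC 1123 date, also part of the signature
        "time-generated-field": time_field,  # record field holding the timestamp
    }


# Placeholder credentials (assumptions), not real values.
workspace_id = "00000000-0000-0000-0000-000000000000"
primary_key = base64.b64encode(b"not-a-real-key").decode("ascii")
body = b'[{"time": "2018-06-01T10:00:00Z", "category": "AuditLogs"}]'
headers = build_headers(
    workspace_id, primary_key, body,
    log_type="Logs_AuditLogs", time_field="time",
    date=formatdate(usegmt=True),
)
print(headers["Authorization"].split(":")[0])
```

Under this assumption, the request itself would go to `https://<workspace id>.ods.opinsights.azure.com/api/logs?api-version=2016-04-01`. The Logic Apps action handles all of this for you.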

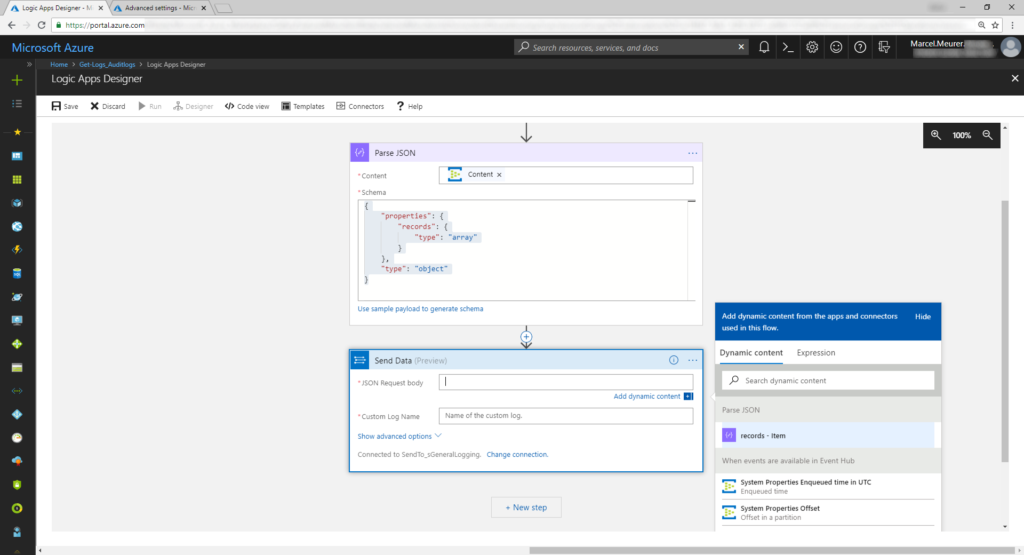

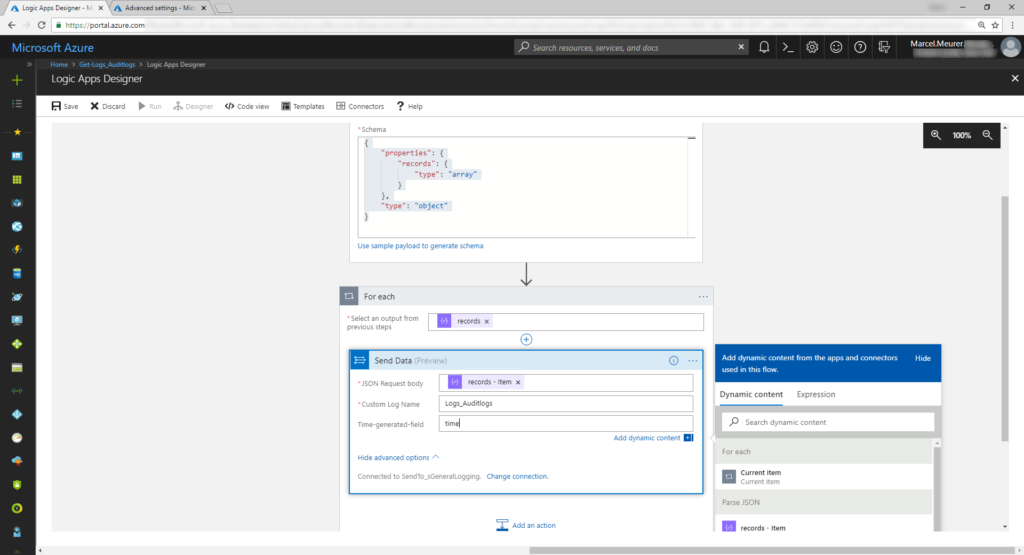

Select the “records” item as input (JSON Request body). Because records is an array, the action is automatically wrapped in a for-each loop.

Insert “time” in the time-generated-field and choose a custom log name: Logs_AuditLogs for this logic app, Logs_SignInLogs for the second one. Do not use “-” in the log name.
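The restriction on “-” follows from the naming rule for custom logs: as far as I know, a custom log name may only contain letters, digits, and underscores (up to 100 characters), and Log Analytics appends `_CL` to the name when it creates the table. A small Python sketch, with the hypothetical helper `custom_table_name`, lets you check a name before you type it into the action:

```python
import re

# Assumed rule: letters, digits, and underscore only, at most 100 characters.
_LOG_NAME = re.compile(r"[A-Za-z0-9_]{1,100}")


def custom_table_name(log_name: str) -> str:
    """Validate a custom log name and return the resulting table name."""
    if not _LOG_NAME.fullmatch(log_name):
        raise ValueError(f"invalid custom log name: {log_name!r}")
    # Custom logs show up in the workspace with a _CL suffix.
    return f"{log_name}_CL"


print(custom_table_name("Logs_AuditLogs"))   # Logs_AuditLogs_CL
print(custom_table_name("Logs_SignInLogs"))  # Logs_SignInLogs_CL
```

A name such as `Logs-AuditLogs` raises a `ValueError` in this sketch. The `_CL` table names are the ones you will later search for in the workspace.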

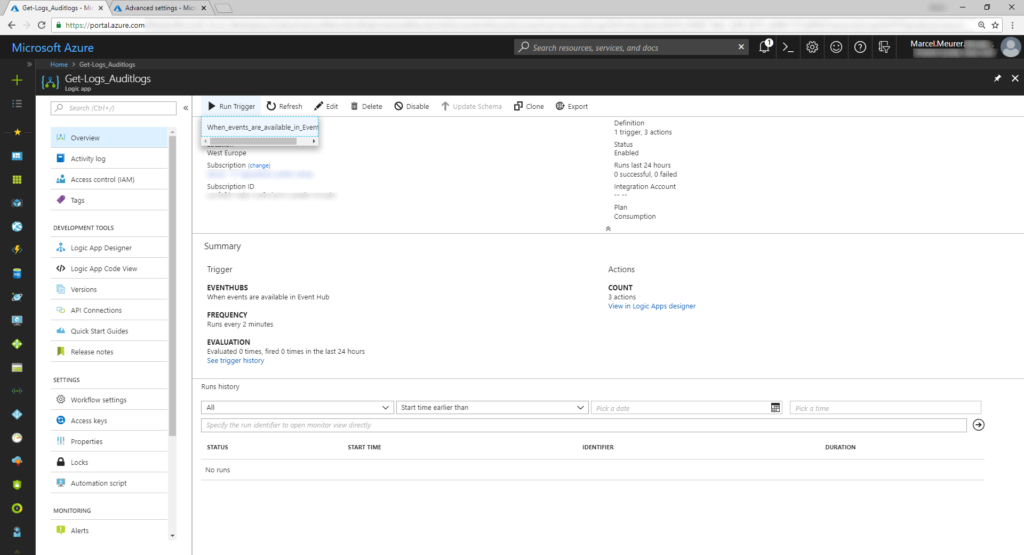

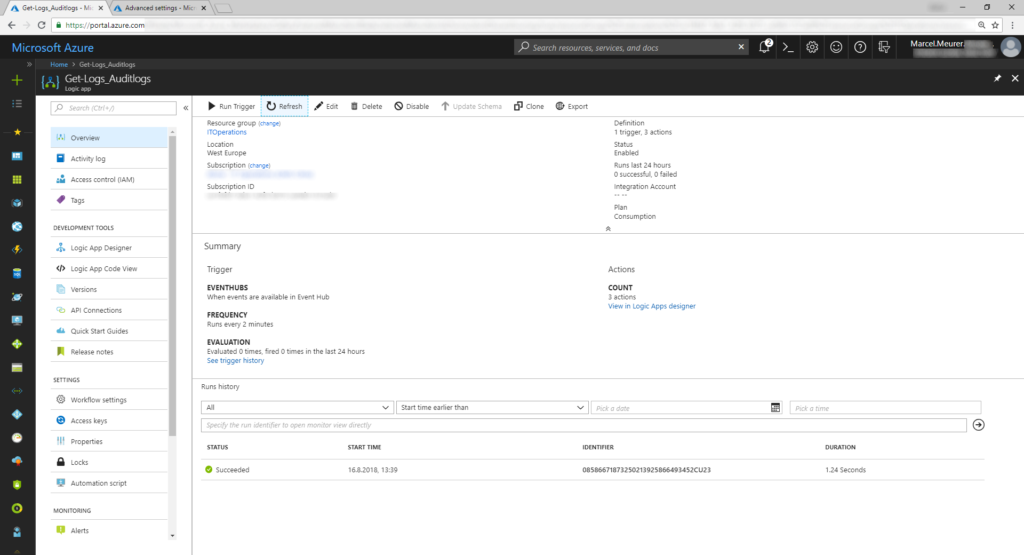

Save the logic app and run the trigger manually once.

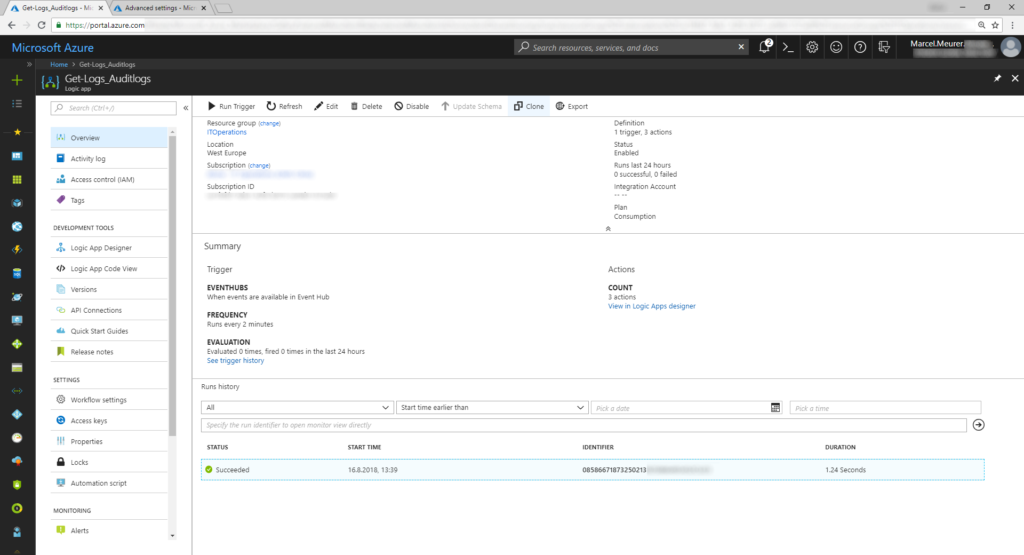

You can see what happens when the logic app gets events from the hub.

Repeat all steps for the second logic app, which collects the sign-in logs.

After data is sent to the Log Analytics workspace for the first time, it takes about 30 minutes to build the data schema. After that, data arrives in your workspace with a delay of approximately 5 minutes.